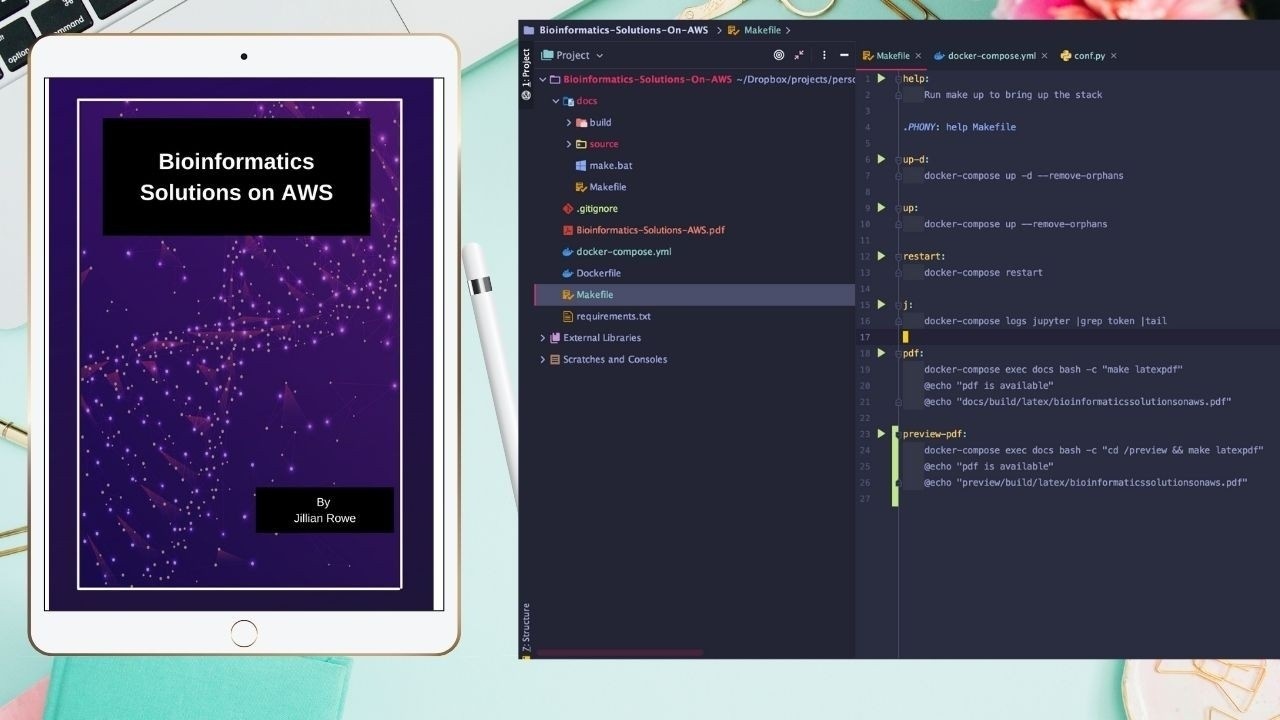

Bioinformatics Solutions on AWS

Bioinformatics Solutions on AWS Book - Preview

Apr 17, 2021


This is the introductory chapter to my new book, Bioinformatics Solutions on AWS.

(I am aware

...

Dask on HPC

Sep 26, 2019


Recently I saw that Dask, a distributed Python library, created some really handy wrappers for

...

Bioinformatics Solutions on AWS Newsletter

Get the first 3 chapters of my book, Bioinformatics Solutions on AWS, as well as weekly updates on the world of Bioinformatics and Cloud Computing, completely free, by filling out the form next to this text.


If you'd like to learn more about AWS and how it relates to the future of Bioinformatics, sign up here.

We won't send spam. Unsubscribe at any time.