Deploy Dash with Helm on Kubernetes with AWS EKS

Jul 14, 2020

Today we are going to talk about deploying a Dash app on Kubernetes with a Helm chart, using EKS, the AWS managed Kubernetes service.

For this post, I'm going to assume that you have an EKS cluster up and running because I want to focus more on the strategy behind a real-time data visualization platform. If you don't, please check out my detailed project template for building AWS EKS Clusters.

Dash is a data visualization platform written in Python.

Dash is the most downloaded, trusted framework for building ML & data science web apps.

Dash empowers teams to build data science and ML apps that put the power of Python, R, and Julia in the hands of business users. Full stack apps that would typically require a front-end, backend, and dev ops team can now be built and deployed in hours by data scientists with Dash. https://plotly.com/dash/

If you'd like to know what the Dash people say about Dash on Kubernetes you can read all about that here.

Pretty much though, Dash is stateless, meaning you can throw it behind a load balancer and DOMINATE. Then, because Kubernetes is taking over the world, can load balance, and can autoscale applications along with the cluster itself being elastic, Dash + Kubernetes is a pretty perfect match!

Data Visualization Infrastructure

Historical View

I've been studying Dash as a part of my ongoing fascination with real-time data visualization of large scale genomic data sets. I suppose other datasets too, but I like biology so here we are.

I think we are reaching new data visualization capabilities with all the shiny new infrastructure out there. When I started off in bioinformatics, all the best data viz apps were desktop applications written in Java. If you'd like to see an example, go check out the Integrative Genomics Viewer (IGV).

There are definite downsides to desktop applications. They just don't scale for the ever-increasing amount and resolution of data. With this approach, there is a limit to the amount of data you can visualize in real-time.

Then there's the approach of setting up pipelines that output a bunch of JPEGs or PDFs, maybe wrapped into an HTML report. This works well enough, assuming your pipeline is working as expected, but it doesn't allow you to update parameters or generally peruse your data in real-time.

Fancy New Data Viz Apps

We're no longer limited to a desktop app to get the level of interactivity we're all looking for when interacting with data. Frameworks such as RShiny and Dash do such a nice job of integrating server-side computing with front-end widgetizing (is that a word?) that you can really think about how to scale your applications.

Compute Infrastructure and Scaling

Now, with all sorts of neat cloud computing, we can have automatically scaling clusters. You can say things like "hey, Kubernetes, when any one of my pods is above 50% CPU throw some other pods on there".

Python and R both have very nice data science ecosystems. You can scale your Python apps with Dask and/or Spark, and your R apps with Spark by completely separating out your web stuff with Dash/Shiny from your heavy-duty computation with Dask/Spark.

If you'd like to read more about how I feel about Dask go check out my blog post Deploy and Scale your Dask Cluster with Kubernetes. (As a side note you can install the Dask chart as is alongside your Dash app and then you can use both!)

Then of course you can throw some networked file storage up in your Kubernetes cluster, because seriously, no matter how fancy my life gets I'm still using bash, ssh, and networked file storage!

Let's Build!

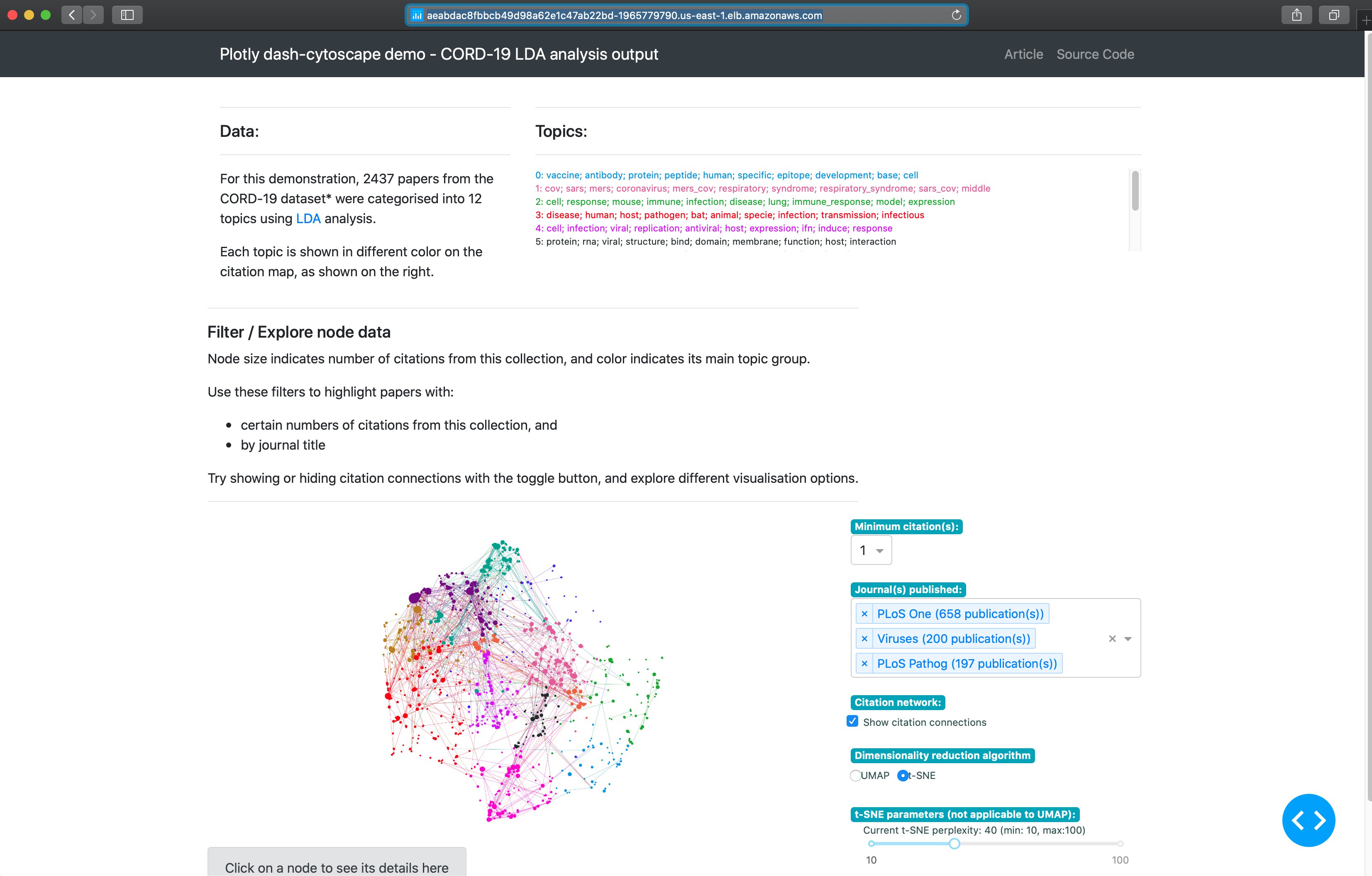

We're using this app. I did not write this app, so many thanks to the Plotly Sample apps! ;-)

I've already built it into a docker container and this post is going to focus on the Helm Chart and Kubernetes Deployment.

Customize the Bitnami/NGINX Helm Chart

I base most of my Helm Charts off of the NGINX Bitnami Helm chart. Today is no exception. I will be changing a few things though!

Then, for the sake of brevity, I'm going to leave out all the metrics-server and load from git niceness. I actually like these and include them in my production deployments, but it's kind of overkill for just throwing a Dash app up on AWS EKS. If you're working towards a production instance, I'd recommend going through the bitnami/nginx helm chart and seeing what all they have there, particularly for metrics, along with replacing the default Ingress with the NGINX Ingress.

We're not going to be modifying any files today. Instead, we will abuse the power of the --set flag in the helm CLI.
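
If the dotted keys look mysterious: each --set key maps to a nested key in the chart's values. As a rough sketch (not what we do in this post), the three overrides used in the install command further down could live in a file instead. The file name dash-values.yaml is my own placeholder, and the keys simply mirror the --set flags from this post.

```shell
# Write the overrides that the --set flags express into a values file.
# dash-values.yaml is an arbitrary name chosen for this sketch.
cat > dash-values.yaml <<'EOF'
image:
  repository: jerowe/dash-sample-app-dash-cytoscape-lda
  # Quoted so YAML keeps the tag as a string instead of a float
  tag: "1.0"
containerPort: 8050
EOF

# With helm installed and a cluster configured, pass the file with -f
# instead of repeating each --set flag:
# helm upgrade --install dash bitnami/nginx -f dash-values.yaml
```

The --set route keeps everything in one command, which is why I stick with it here. A values file tends to be easier to review once the list of overrides grows.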

A quick note about SSL

Getting into SSL is a bit beyond the scope of this tutorial, but here are two resources to get you started.

The first is a Digital Ocean tutorial on securing your application with the NGINX Ingress. I recommend giving this article a thorough read as this will give you a very good conceptual understanding of setting up https. In order to do this, you will need to switch the default Ingress with the NGINX Ingress.

The second is an article by Bitnami. It is a very clear tutorial on using helm charts to get up and running with HTTPS, and I think it does an excellent job of walking you through the steps as simply as possible.

If you don't care about understanding the ins and outs of https with Kubernetes just go with the Bitnami tutorial. ;-)

Install the Helm Chart

Let's install the Helm Chart. First off, we'll start with the simplest configuration in order to test that nothing too strange is happening.

# Install the bitnami/nginx helm chart with a RELEASE_NAME dash
helm repo add bitnami https://charts.bitnami.com/bitnami
helm repo update
helm upgrade --install dash bitnami/nginx \
  --set image.repository="jerowe/dash-sample-app-dash-cytoscape-lda" \
  --set image.tag="1.0" \
  --set containerPort=8050

Get the Dash Service

Alrighty. You can either pay attention to what the notes say when you install, get the notes again with Helm, or use the Kubernetes CLI (kubectl).

Use Helm

This assumes that the information you need is actually in the Helm notes.

helm get notes dashThis should spit out:

export SERVICE_IP=$(kubectl get svc --namespace default dash-nginx --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")

echo "NGINX URL: http://$SERVICE_IP/"If you're on AWS you'll see something similar to this:

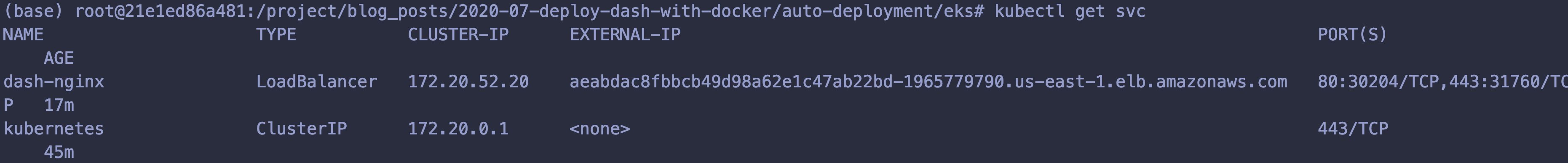

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dash-nginx LoadBalancer 172.20.48.151 acf3379ed0fcb4790afc8036310259dc-994191965.us-east-1.elb.amazonaws.com 80:31019/TCP,443:32684/TCP 18m

kubernetes ClusterIP 172.20.0.1 <none> 443/TCP 76mUse kubectl to get the SVC

You can also just run

kubectl get svc | grep dash

Or you can use the JSON output to programmatically grab your SVC. This is handy if you want to update it with a CI/CD service.

export EXPOSED_URL=$(kubectl get svc --namespace default dash-nginx -o json | jq -r '.status.loadBalancer.ingress[]?.hostname')
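
In a CI/CD job the hostname may not be populated the instant the chart is installed, so it can help to poll for it. Here's a minimal sketch, assuming kubectl and jq are installed and that the service is called dash-nginx in the default namespace, as in the output above. The helper names lb_hostname, lb_ready, and retry are my own, not part of any tool.

```shell
# lb_hostname prints the load balancer hostname, or nothing if none is assigned yet.
lb_hostname() {
  kubectl get svc --namespace default dash-nginx -o json \
    | jq -r '.status.loadBalancer.ingress[]?.hostname'
}

# lb_ready succeeds once lb_hostname prints a non-empty value.
lb_ready() {
  [ -n "$(lb_hostname)" ]
}

# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or ATTEMPTS tries have been used up.
retry() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while ! "$@"; do
    if [ "$i" -ge "$attempts" ]; then
      return 1
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 0
}

# Example: poll every 10 seconds, up to 30 times, then export the URL.
# retry 30 10 lb_ready && export EXPOSED_URL="$(lb_hostname)"
```

The attempt count and delay in the example are guesses; tune them to however long your load balancer usually takes to show up.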

Check out Dash on AWS!

Grab that URL and check out our Dash App!

SCALE

Now, this is where things get fun! We can scale the application, dynamically or manually. The helm chart is already set up to work as a Load Balancer, so we can have 1 Dash app or as many Dash apps as we have compute power for. The Load Balancer will take care of serving all these under a single URL in a way that's completely hidden from the end user.

Manually Scale the Number of Dash Apps Running

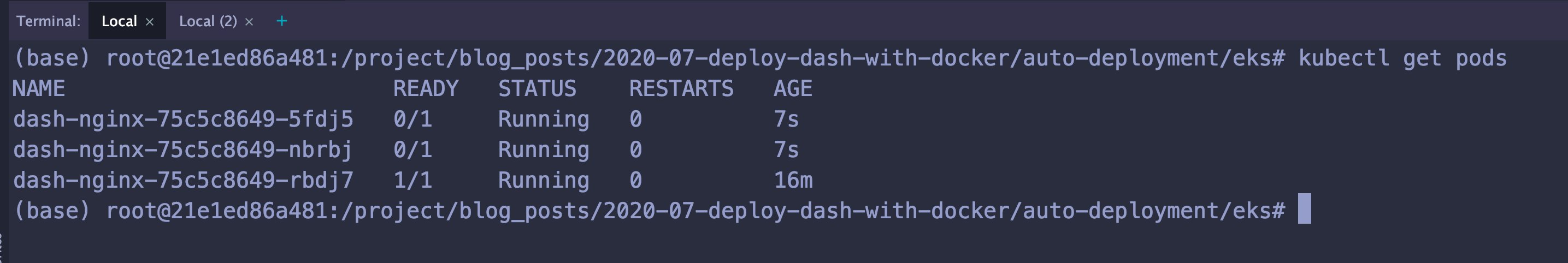

First, we're going to manually scale the number of Dash Apps running by increasing the number of replicas. This is pretty standard in web land.

helm upgrade --install dash bitnami/nginx \
  --set image.repository="jerowe/dash-sample-app-dash-cytoscape-lda" \
  --set image.tag="1.0" \
  --set containerPort=8050 \
  --set replicaCount=3

Now when you run kubectl get pods you should see 3 dash-nginx-* pods.

Then, when you run kubectl get svc you'll see that there is still one LoadBalancer service for the Dash App.
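
If you'd rather check the pod count from a script than by eye, here's a small sketch that counts Running pods from the kubectl get pods output. The helper name count_running is my own, and it assumes the default column layout (NAME, READY, STATUS, RESTARTS, AGE).

```shell
# count_running PREFIX
# Reads `kubectl get pods` output on stdin and prints how many pods whose
# name starts with PREFIX are in the Running state. The header row is skipped.
count_running() {
  awk -v prefix="$1" \
    'NR > 1 && index($1, prefix) == 1 && $3 == "Running" { n++ } END { print n + 0 }'
}

# Example with a cluster available:
# kubectl get pods | count_running dash-nginx-
```

After the replicaCount=3 upgrade this should eventually print 3, once all of the pods have started.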

That's the most straightforward way to statically scale your app. Let's bring it back down, because next we're going to dynamically scale it!

helm upgrade --install dash bitnami/nginx \

--set image.repository="jerowe/dash-sample-app-dash-cytoscape-lda" \

--set image.tag="1.0" \

--set containerPort=8050 \

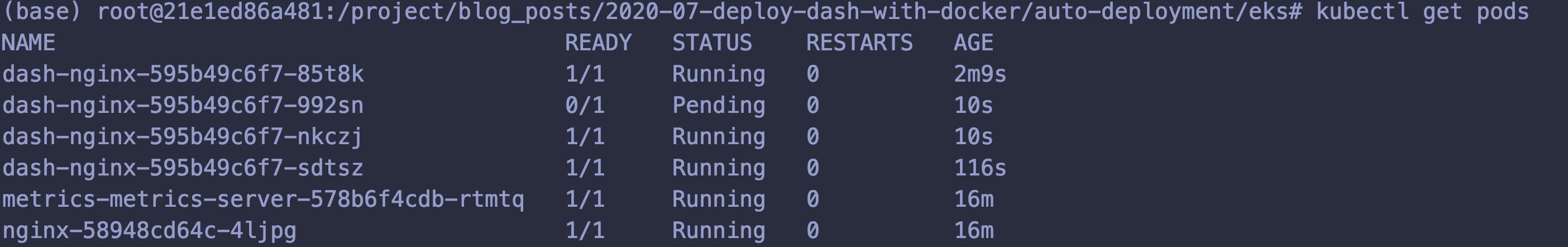

--set replicaCount=1Dynamically Scale your Dask App with a Horizontal Pod AutoScaler

The Kubernetes Horizontal Pod Autoscaler allows you dynamically scale your application based on the CPU or Memory load on the pod. Pretty much you set a rule, say that once a rule reaches some percent of total CPU to increase the number of pods.
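
The rule in the Kubernetes documentation boils down to desiredReplicas = ceil(currentReplicas * currentUtilization / targetUtilization), kept between the minimum and maximum replica counts. Here's a rough sketch of that arithmetic in shell. It ignores the tolerance band and stabilization behavior the real controller applies, and the function name desired_replicas is my own.

```shell
# desired_replicas CURRENT_REPLICAS CURRENT_PERCENT TARGET_PERCENT MIN MAX
# Prints ceil(CURRENT_REPLICAS * CURRENT_PERCENT / TARGET_PERCENT),
# clamped to the range MIN..MAX. All arguments are whole numbers.
desired_replicas() {
  current=$1
  usage=$2
  target=$3
  min=$4
  max=$5
  # Integer ceiling division: (a + b - 1) / b
  desired=$(( (current * usage + target - 1) / target ))
  if [ "$desired" -lt "$min" ]; then desired=$min; fi
  if [ "$desired" -gt "$max" ]; then desired=$max; fi
  echo "$desired"
}

# 2 pods averaging 90% CPU against a 50% target, allowed range 1..10
desired_replicas 2 90 50 1 10    # prints 4
```

Playing with the numbers this way makes it easier to see how a low target or a tight maxReplicas changes what the autoscaler will do.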

This is kind of tricky for people, so I'm going to show the code from the Nginx helm chart.

Here it is in the values.yaml.

# https://github.com/bitnami/charts/blob/master/bitnami/nginx/values.yaml#L497
## Autoscaling parameters
##
autoscaling:
  enabled: false
  # minReplicas: 1
  # maxReplicas: 10
  # targetCPU: 50
  # targetMemory: 50

And here it is in the templates/hpa.yaml.

# https://github.com/bitnami/charts/blob/master/bitnami/nginx/templates/hpa.yaml
{{- if .Values.autoscaling.enabled }}
apiVersion: autoscaling/v2beta1
kind: HorizontalPodAutoscaler
metadata:
  name: {{ template "nginx.fullname" . }}
  labels: {{- include "nginx.labels" . | nindent 4 }}
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: {{ template "nginx.fullname" . }}
  minReplicas: {{ .Values.autoscaling.minReplicas }}
  maxReplicas: {{ .Values.autoscaling.maxReplicas }}
  metrics:
    {{- if .Values.autoscaling.targetCPU }}
    - type: Resource
      resource:
        name: cpu
        targetAverageUtilization: {{ .Values.autoscaling.targetCPU }}
    {{- end }}
    {{- if .Values.autoscaling.targetMemory }}
    - type: Resource
      resource:
        name: memory
        targetAverageUtilization: {{ .Values.autoscaling.targetMemory }}
    {{- end }}
{{- end }}

You set your minimum number of replicas, your maximum number of replicas, and the amount of Memory/CPU you're targeting.

Now, this is a blog post and not particularly indicative of the real world. I'm going to show you some values so this is interesting, but really when you're doing your own autoscaling you'll have to play around with this.
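
One thing that helps when picking values: the CPU target is a percentage of each pod's CPU request, not of the whole node. Here's a quick sketch of that arithmetic using the numbers from later in this post (a 200m CPU request, a target of 1, and a 2 CPU worker node). The helper names are my own, and the per-node figure ignores system pods, reserved resources, and any per-node pod limits, so treat it as an upper bound.

```shell
# cpu_threshold_millicores REQUEST_MILLICORES TARGET_PERCENT
# Average per-pod CPU usage, in millicores, at which the HPA target is reached.
cpu_threshold_millicores() {
  echo $(( $1 * $2 / 100 ))
}

# pods_that_fit NODE_MILLICORES REQUEST_MILLICORES
# Upper bound on pods per node when only CPU requests are considered.
pods_that_fit() {
  echo $(( $1 / $2 ))
}

cpu_threshold_millicores 200 1    # prints 2
pods_that_fit 2000 200            # prints 10 (2 CPUs = 2000 millicores)
```

A threshold of 2 millicores is tiny, which is the point for a demo: a handful of page refreshes is enough to trip it. For a real deployment you'd want a much higher target.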

First, we'll have to install the metrics server.

helm repo add stable https://kubernetes-charts.storage.googleapis.com
helm repo update
helm install metrics stable/metrics-server

If you get an error here about metrics already taken, you probably have the metrics chart installed already. It's installed by default on a lot of platforms or may have been required by another chart.

In order to get this to work you need to set limits on the resources of the deployment itself so Kubernetes has a baseline for scaling.

I'm using a t2.medium instance for my worker groups, which has 2 CPUs, 24 CPU credits/hour, and 4GB of memory. You should be fine if you're on a larger instance, but if you're on a smaller one you may need to play with the resource values.

# Memory quantities take suffixes such as Mi or Gi. A lowercase "m" means milli-units and only makes sense for CPU.
helm upgrade --install dash bitnami/nginx \
  --set image.repository="jerowe/dash-sample-app-dash-cytoscape-lda" \
  --set image.tag="1.0" \
  --set autoscaling.enabled=true \
  --set autoscaling.minReplicas=2 \
  --set autoscaling.maxReplicas=3 \
  --set autoscaling.targetCPU=1 \
  --set resources.limits.cpu="200m" \
  --set resources.limits.memory="200Mi" \
  --set resources.requests.cpu="200m" \
  --set resources.requests.memory="200Mi" \
  --set containerPort=8050

We should see, at a minimum, 2 pods (or 1 pod up with one scaling up).

kubectl get pods

Then we can describe our HPA.

kubectl get hpa

kubectl describe hpa dash-nginxI set the resource requirements very low, so grab your SVC and just keep refreshing the page. You should see the pods start scaling up.

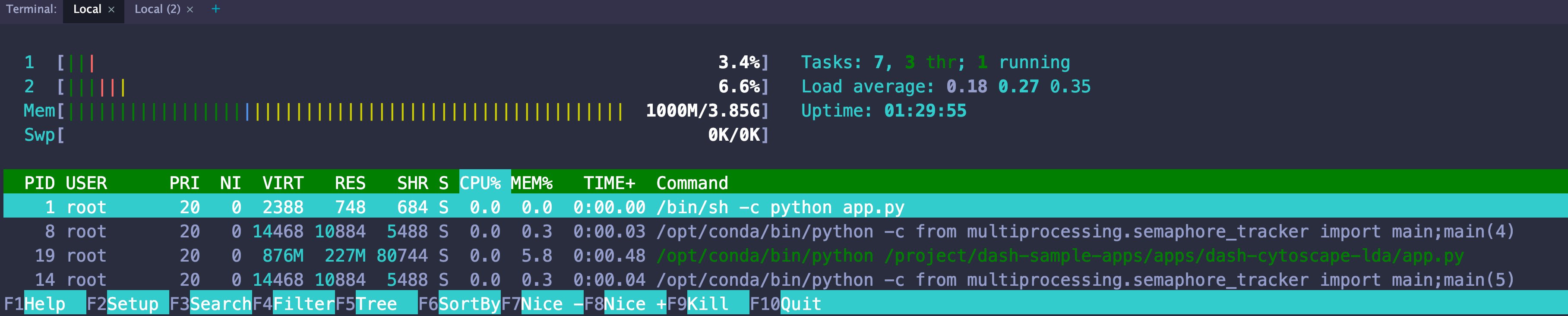

kubectl get svc |grep dashFigure out your Autoscaling Values with htop

Now, what you should do is to install Prometheus/Grafana and use the metrics server to keep track of what's happening on your cluster.

But sometimes you just don't want to be bothered. In that case, you can always just exec into a Kubernetes pod and run htop.

kubectl get pods | grep dash
# grab the POD_NAME - something like this dash-nginx-75c5c8649-rbdj7
kubectl exec -it POD_NAME -- bash

Then, depending on when you read this, you may need to install htop.

# Refresh the package index first so the install can find htop
apt-get update
apt-get install -y htop
htop

Wrap Up

That's it! I hope you see how you can gradually build up your data science and visualization infrastructure, piece by piece, in order to do real-time data visualization of large data sets!

If you have any questions, comments, or tutorial requests, please reach out to me at [email protected], or leave a comment below. ;-)

Helpful Commands

Here are some helpful commands for navigating your Dash deployment on Kubernetes.

Helm Helpful Commands

# List the helm releases
helm list

# Add the Bitnami Repo
helm repo add bitnami https://charts.bitnami.com/bitnami
helm repo update

# Install a helm release from a helm chart (the nice way to integrate with CI/CD)
helm upgrade --install RELEASE_NAME bitnami/nginx

# Install a helm chart from a local filesystem
helm upgrade --install RELEASE_NAME ./path/to/folder/with/values.yaml

# Get the notes
helm get notes RELEASE_NAME

Kubectl Helpful Commands

# Get a list of all running pods
kubectl get pods

# Describe the pod. This is very useful for troubleshooting!
kubectl describe pod PODNAME

# Drop into a shell in your pod. It's like docker exec.
kubectl exec -it PODNAME -- bash

# Get the logs from stdout on your pod
kubectl logs PODNAME

# Get all the services and urls
kubectl get svc